1,环境安装

不懂得自己百度一下找教程。不在叙述。下面是笔记

环境。WIN10LTS,GTX750TI

建议不好用CPU,时间太漫长。翻箱底翻了个

conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/ conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/ conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/ conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge/ conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/msys2/ conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/bioconda/ conda config --add channels http://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/menpo/ conda config --set show_channel_urls yes conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/ conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/conda-forge conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/msys2/ conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/pytorch/ conda config --set show_channel_urls yes

conda create -n yolov5 python==3.8

询问是否下载,输入y

activate yolov5

cd yolov5

查看cuda版本,下载相应版本pytroch和cudnn

import torch

torch.cuda.is_available()

conda env remove -n yolov5_cuda10.2

验证CUDA是否可用

import torch

torch.cuda.is_available()

conda env remove -n yolov5_cuda10.2

版本必须对于上。离线本地安装

pip install torch-2.1.1+cu121-cp38-cp38-win_amd64.whl

pip install torchvision-0.16.1+cpu-cp38-cp38-win_amd64.whl

pip install torchvision==0.16.1 –index-url https://download.pytorch.org/whl/cu121

运行例子缺少组件加这个

import os

os.environ[“GIT_PYTHON_REFRESH”] = “quiet”

使用labelimg标记图片

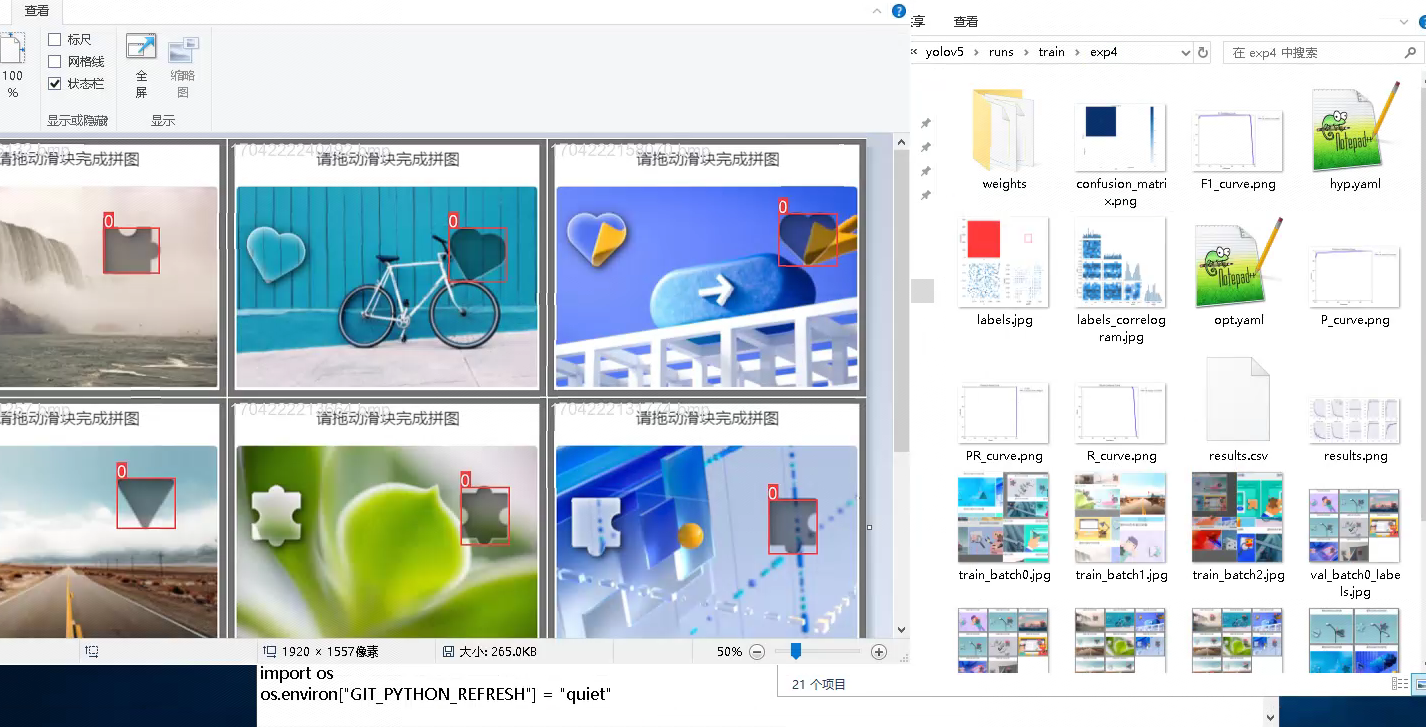

导出为YOLO得格式。我这里用了初略200个试了下效果可以。后面增加到500个。精度很高了

训练

python train.py –weights yolov5s.pt –batch-size=4 –epochs 40 –data=./data/sf.yaml

- path: C:\Users\Administrator\yolov5\data\sf

- train: C:\Users\Administrator\yolov5\data\sf\train # train images (relative to 'path') 128 images

- val: C:\Users\Administrator\yolov5\data\sf\val # val images (relative to 'path') 128 images

- test:

-

- # Classes

- names:

- 0: 0

查看验证集结果,效果很好,都很精确

部署FLASK接口供其他程序使用

import argparse

import csv

import os

import platform

import sys

from pathlib import Path

import numpy as np

import torch

FILE = Path(__file__).resolve()

ROOT = FILE.parents[0] # YOLOv5 root directory

if str(ROOT) not in sys.path:

sys.path.append(str(ROOT)) # add ROOT to PATH

ROOT = Path(os.path.relpath(ROOT, Path.cwd())) # relative

from ultralytics.utils.plotting import Annotator, colors, save_one_box

from models.common import DetectMultiBackend

from utils.dataloaders import IMG_FORMATS, VID_FORMATS, LoadImages, LoadScreenshots, LoadStreams

from utils.general import (LOGGER, Profile, check_file, check_img_size, check_imshow, check_requirements, colorstr, cv2,

increment_path, non_max_suppression, print_args, scale_boxes, strip_optimizer, xyxy2xywh)

from utils.torch_utils import select_device, smart_inference_mode

from models.experimental import attempt_load

import base64

from flask import Flask, request, Response,render_template

import json

import cv2

import time

from PIL import Image

app = Flask(__name__)

UPLOAD_FOLDER = r'./uploads'

app.config['UPLOAD_FOLDER'] = UPLOAD_FOLDER

def base64_to_image(base64_code):

# base64解码

img_data = base64.b64decode(base64_code)

# 转换为np数组

img_array = np.fromstring(img_data, np.uint8)

# 转换成opencv可用格式

image_base64_dec = cv2.imdecode(img_array, cv2.COLOR_RGB2BGR)

return image_base64_dec

def redirect(source):

#source = 'C:/Users/Administrator/yolov5/data/images/1704215184961.bmp',

stride = int(model.stride.max())

imgsz = check_img_size(640, s=stride)

dataset = LoadImages(source)

#seen, windows, dt = 0, [], (Profile(), Profile(), Profile())

for path, im, im0s, vid_cap, s in dataset:

im = torch.from_numpy(im).to(device)

im = im.half() if model.half else im.float() # uint8 to fp16/32

im /= 255 # 0 - 255 to 0.0 - 1.0

if len(im.shape) == 3:

im = im[None] # expand for batch dim

pred = model(im, augment=False, visualize=False)

pred = non_max_suppression(pred, conf_thres, iou_thres, None, False, max_det=1000)

seen = 0

for i, det in enumerate(pred): # per image

seen += 1

p, im0, frame = path, im0s.copy(), getattr(dataset, 'frame', 0)

if len(det):

det[:, :4] = scale_boxes(im.shape[2:], det[:, :4], im0.shape).round()

for *xyxy, conf, cls in reversed(det):

c1, c2 = (int(xyxy[0]), int(xyxy[1])), (int(xyxy[2]), int(xyxy[3]))

#print("左上点的坐标为:(" + str(c1[0]) + "," + str(c1[1]) + "),右下点的坐标为(" + str(c2[0]) + "," + str(c2[1]) + ")")

print("识别坐标为:" +str(c1[0]))

return str(c1[0])

return

def allowed_file(filename):

return '.' in filename and filename.rsplit('.', 1)[1] in ALLOWED_EXTENSIONS

@app.route('/detect', methods=['POST'])

def detect():

file = request.files['file']

src_path = os.path.join(app.config['UPLOAD_FOLDER'], file.filename)

file.save(src_path)

#source = src_path

print(src_path)

results = redirect(src_path)

return results

# do something with the results

#return jsonify({'result': results})

if __name__ == "__main__":

#opt=parse_opt()

#run(**vars(opt))

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model = attempt_load(ROOT / 'yolov5s.pt')

model.to(device).eval()

model.half()

conf_thres = 0.65 # NMS置信度

iou_thres = 0.45 # IOU阈值

#print(opt)

app.run(host='0.0.0.0', port=5001, debug=True)

最终效果

返回缺口坐标,很好,收工。

一些参考连接

https://blog.csdn.net/m0_58892312/article/details/120923608

https://blog.csdn.net/ml1211449109/article/details/119811567

https://blog.csdn.net/weixin_52797278/article/details/125427898

https://zhuanlan.zhihu.com/p/513967538

https://blog.csdn.net/wangzhuanjia/article/details/125278833